IRIS: Wireless Ring for Vision-based Smart Home Interaction

Maruchi Kim* Antonio Glenn* Bandhav Veluri*

Yunseo Lee, Eyoel Gebre, Aditya Bagaria,

Shyamnath Gollakota, Shwetak Patel

(* Equal contribution)

University of Washington

UIST '24: Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology

Demo: User Study Participant

Demo: System Explanation

Demo: Low-Power Between Gestures

Abstract

Integrating cameras into wireless smart rings has been challenging due to size and power constraints. We introduce IRIS, the first wireless vision-enabled smart ring system for smart home interactions. Equipped with a camera, Bluetooth radio, inertial measurement unit (IMU), and an onboard battery, IRIS meets the small size, weight, and power (SWaP) requirements for ring devices. IRIS is context-aware, adapting its gesture set to the detected device, and can last for 16-24 hours on a single charge. IRIS leverages scene semantics to achieve instance-level device recognition. In a study involving 23 participants, IRIS consistently outpaced voice commands, and a higher proportion of participants preferred IRIS over voice commands for toggling a device's state, granular control, and social acceptability. Our work pushes the boundary of what is possible with ring form-factor devices, addressing system challenges and opening up novel interaction capabilities.

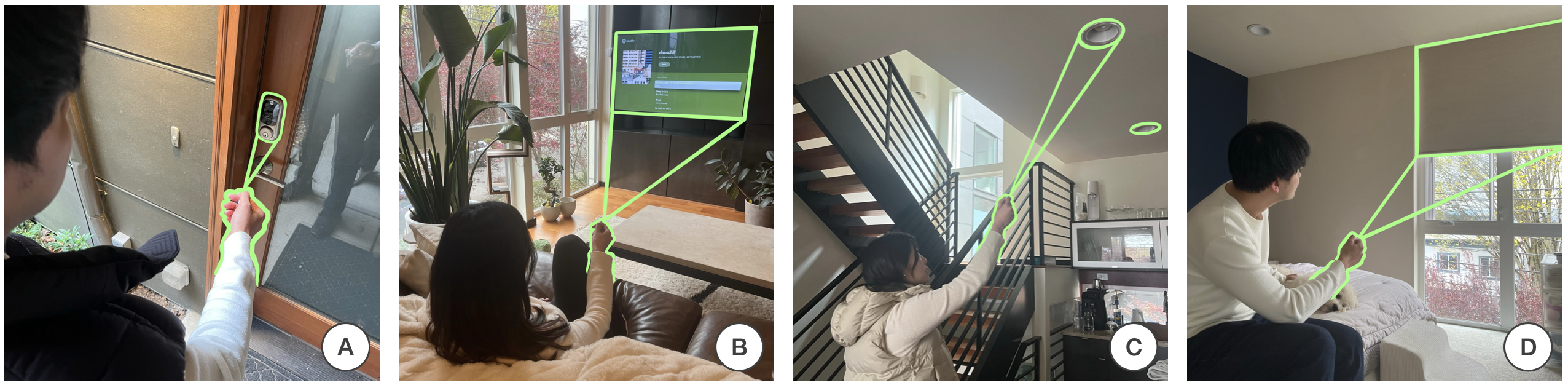

Smart home interaction with IRIS. (A) A user unlocks the front door by pointing and clicking IRIS at the smart lock. (B) Another user points IRIS at a television and rotates their hand to adjust its volume. (C) The user points IRIS at their living room lights to turn them off before leaving home. (D) A user points and clicks IRIS at the blinds to lower them for privacy.

IRIS hardware inside its 3D-printed enclosure and placed beside a quarter for scale. The battery sits inside the band of the ring. The ring diameter and band thickness are 17.5 mm and 2.9 mm, respectively.

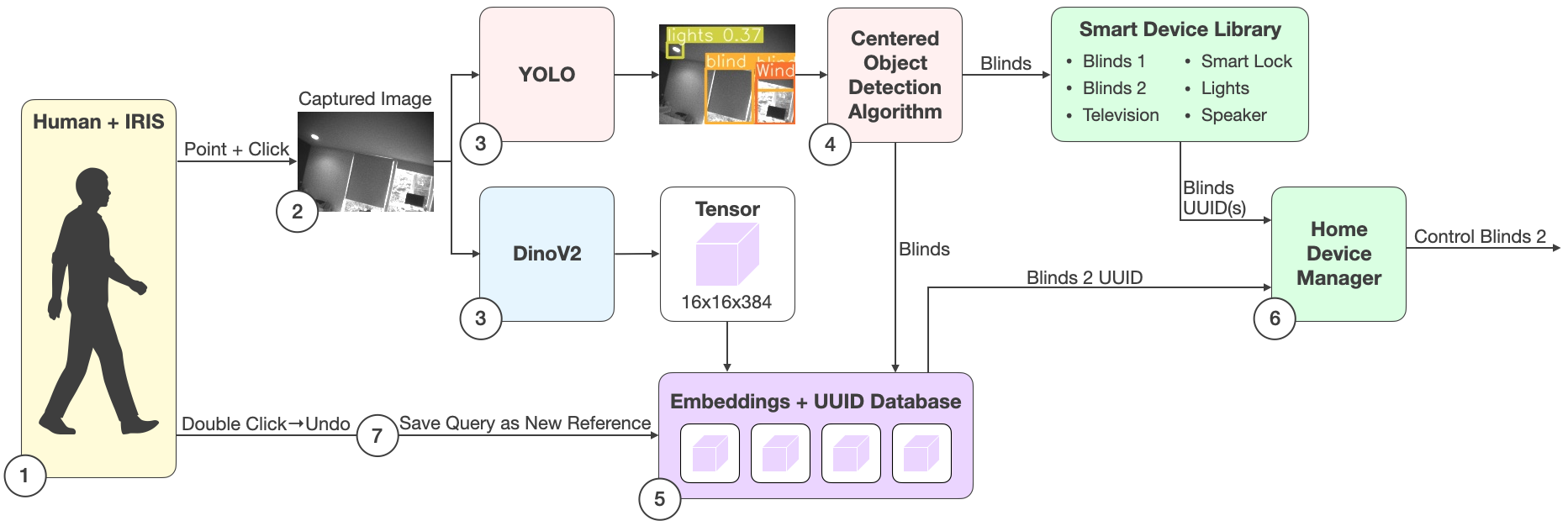

IRIS ML pipeline. (1) A user points and clicks at the smart device they would like to control. (2) IRIS wirelessly streams the images to a smartphone, and (3) runs YOLO and DINOv2. (4) The centered object detection algorithm (CODA) filters the multiple objects YOLO may detect and outputs the object closest to the center of the frame. IRIS then queries the smart device library; because this example home contains two instances of Blinds, the class label alone cannot identify the device, so IRIS uses the DINOv2 path. The output embedding from DINOv2 is passed to (5) the Embedding + UUID database to find the stored embedding with the highest similarity, and the output of CODA is also passed as input to reduce the search space. The highest similarity corresponds to the Blinds 2 UUID, so (6) the Home Device Manager controls Blinds 2.
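The selection and lookup steps in this caption can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `Detection` type, the function names, the dictionary-based device library, the toy three-dimensional embeddings, and the choice of cosine similarity as the similarity measure are all assumptions made for illustration. The real system would take its detections from YOLO and its embeddings from DINOv2.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str   # class name, e.g. "blinds" (hypothetical labels)
    box: tuple   # (x_min, y_min, x_max, y_max) in pixels


def centered_detection(detections, frame_w, frame_h):
    """CODA-style filter: keep the detection whose box center is nearest the frame center."""
    if not detections:
        return None
    cx, cy = frame_w / 2, frame_h / 2

    def distance_to_center(d):
        x0, y0, x1, y1 = d.box
        return math.hypot((x0 + x1) / 2 - cx, (y0 + y1) / 2 - cy)

    return min(detections, key=distance_to_center)


def cosine(a, b):
    """Cosine similarity (assumed metric; the caption only says 'highest similarity')."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def resolve_device(label, embedding, library, database):
    """Map a detected class to one device UUID.

    library:  class label -> list of device UUIDs registered in the home
    database: list of (uuid, label, stored_embedding) tuples
    """
    uuids = library.get(label, [])
    if len(uuids) == 1:
        return uuids[0]  # a single instance: the class label is enough
    # Several instances (or none listed): compare embeddings, restricted
    # to the detected class to shrink the search space.
    candidates = [(u, e) for u, l, e in database if l == label]
    if not candidates:
        return None
    return max(candidates, key=lambda c: cosine(embedding, c[1]))[0]


# Toy example: a 320x240 frame with a TV in the corner and blinds near the center.
detections = [
    Detection("tv", (0, 0, 40, 40)),
    Detection("blinds", (140, 100, 200, 160)),
]
target = centered_detection(detections, 320, 240)

library = {"tv": ["tv-1"], "blinds": ["blinds-1", "blinds-2"]}
database = [
    ("blinds-1", "blinds", [1.0, 0.0, 0.0]),
    ("blinds-2", "blinds", [0.0, 1.0, 0.0]),
]
print(resolve_device(target.label, [0.1, 0.9, 0.0], library, database))  # blinds-2
```

Restricting the embedding comparison to the class chosen by the centered detection mirrors the caption's point that the CODA output reduces the search space: only the stored blinds embeddings are compared, not every device in the home.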

Keywords: Smart ring, smart home, internet of things, ubiquitous computing, user interface, computer vision, low-power camera, wearable, interaction

Contact: iris@cs.washington.edu